
For more crisp and insightful business and financial news, subscribe to The Daily Upside newsletter.

It’s completely free and we guarantee you’ll learn something new every day.

Unless you’ve been living off the grid somewhere in the Azores, you’ve probably heard of a little thing called ChatGPT.

It’s a very impressive generative AI chatbot, created by a company called OpenAI and released to the public in November. By ingesting huge amounts of text written by actual humans, ChatGPT can produce a wide variety of texts, from song lyrics to résumés to college essays.

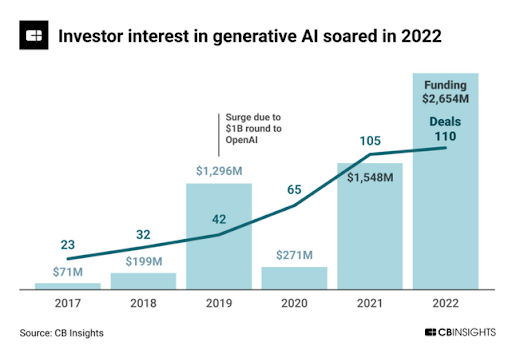

ChatGPT kicked off a maelstrom of excitement, and not just among consumers, but also among Big Tech companies and investors, who poured money into any company that had the word “AI” anywhere on its pitch deck. But generative AI tools capable of whipping up text, images, and audio have also caused widespread hand-wringing about mass plagiarism and the obliteration of creative industries. (At The Daily Upside, “better than bots” is our new rallying cry.)

For today’s feature, we’ll look at whether AI actually lives up to the hype, and what legal guardrails exist to steer its progress. So sit back, relax, and if at any point you hear a voice saying “I’m afraid I can’t do that, Dave,” please remain calm.

Commercialization Stations

When generative AI tools were just being used to create silly memes (i.e. yesterday), the debate about robots and our future seemed largely theoretical. Things started to get tricky once it became obvious that this was a deeply commercializable tool.

ChatGPT upped the ante because it kicked off an arms race between Microsoft and Google. Microsoft announced in January that it would invest $10 billion in OpenAI, and a few weeks later it unveiled a new version of its search engine Bing which, instead of showing users a page of search results, would give written answers to questions using ChatGPT’s tech.

The news sent Alphabet, which has never faced serious competition in search, like, ever, scrambling, and it soon announced it would build its own generative AI search tool, called Bard. Unfortunately, Bard made an error in one of its answers on its debut, and investors responded by wiping $120 billion off Google’s market value in a matter of hours.

Google’s knee-jerk reaction was short-sighted but understandable. ChatGPT’s overnight popularity sparked an ongoing onslaught of articles suggesting that generative AI is the biggest thing to happen to the internet since social media, and specifically that it could forever change the way we search, and even the verb we use when we do so. So sure, Google noticed.

As it turns out, Google needn’t have rushed. The new generative-AI-powered Bing turned out to be just as fallible as Bard, and seemingly borderline sociopathic to boot. In one example, it insisted that the year was still 2022. In another, it went completely off the rails and told a New York Times journalist to leave his wife.

News websites that deployed generative text tools have also learned the hard way that errors happen, particularly when it comes to math. CNET published 77 stories using a generative AI text tool and found errors in half of them.

So what’s the point of a search engine you have to fact-check, and is all the hype even remotely justified?

Dr. Sandra Wachter, an expert in AI and regulation at the Oxford Internet Institute, told The Daily Upside that generative AI’s research abilities have been greatly overestimated.

“The big hope is that it will cut research time in half or be a tool for finding useful information faster… It’s not able to do that now, and I’m not even sure it will be able to do that at some point,” she said.

She added that ChatGPT’s confident tone amplifies the problem. It’s a know-it-all that doesn’t know what it doesn’t know.

“The problem is the algorithm doesn’t know what it’s doing, but it’s super convinced that it knows what it’s doing,” she said. “I wish I had that kind of confidence when I’m talking about stuff I actually know something about… If your standards aren’t that high, I think it’s a good tool to play around with, but you shouldn’t trust it.”

What Is Art? Answer: Copyrightable

While ChatGPT sent an existential shiver down the spine of journalists and copywriters, artists had already faced the problems of accessible, commercializable AI in the form of image generators such as Stable Diffusion and Dall-E. Like ChatGPT, these tools are built on vast amounts of scraped material, in their case hoards of images, many of which are copyrighted.

Legal lines are already being drawn in the sand around AI-generated images. Getty Images filed a lawsuit against Stability AI (the company behind Stable Diffusion) in February for using its pictures without permission.

Similar legal battles could play out between search engine owners and publishers. Big Tech and journalism already have a pretty toxic codependent relationship, with news companies feeling held hostage to the back-end algorithm tweaks that change how readers access news online. Now generative AI search engines could scrape text from news sites and repackage it for users, meaning less traffic for those sites and, ergo, less advertising or subscription revenue.

The question of generative AI copyright is two-pronged, as it concerns both input and output:

- On the input side, AI companies may be forced to obtain licenses to get the training data they want. In the EU there are exemptions to copyright law that allow data scraping, but only for research purposes. “The question of is this research or is this not, that will be a fighting point,” Wachter said.

- On the output side, once the AI tool has spat out a piece of text or an image, who owns the rights to it? In February last year the US Copyright Office ruled that an AI can’t hold the copyright to a work of art it made, but who can copyright it remains an open question.

Data protection law could also apply to generative AI makers, but Wachter stressed there is no new legal framework tailored to the technology. The US published its Blueprint for an AI Bill of Rights last year, but that was in October, a month before ChatGPT made its dazzling debut. The EU also has an upcoming AI Act, but again, it just didn’t see generative AI coming to the fore so fast.

“The new regulation will do next to nothing to govern this,” Wachter said. One part of the current proposals that could potentially be adapted to generative AI tools is a set of new transparency and accountability standards for chatbots. The idea is to make it clear to users when they’re talking to a cheery customer service bot rather than a world-weary customer service employee.

But even if companies were forced to watermark AI works, Wachter doesn’t think that would help much. “That’s a cat-and-mouse game, because at some point there will be a technology that helps you remove that watermark,” she said.

So What Can We Do?

Good or bad, better or worse, generative AI is here now and it’s not going away. Wachter thinks a three-pronged approach is needed to make sure ChatGPT and its brethren don’t become garrulous bulls in the internet’s china shop:

- Education: although schools have blocked access to ChatGPT to stop students from getting it to write their homework for them (as have major banks including JP Morgan and Citibank), Wachter thinks kids and grownups alike need to play around with AI tools so they can become familiar with their strengths and limitations.

- Transparency: despite the possibility that AI watermarks will one day be removable, a little digital badge from the developer saying “an AI made this” could be a useful step toward people treating its output with a more critical eye.

- Accountability: the spam and misinformation potential of ChatGPT-style tools is huge, so Wachter envisions a future where platforms have to tightly control the spread of AI-generated content.

Regulation never moves as fast as technology, but the furor around generative AI may prompt lawmakers to plant their flag.

“The main thing that I really want to stop hearing is just the panic around it [AI],” Wachter told The Daily Upside, adding: “It’s a fun technology that can be used for many purposes, and yet we only focus on the horrendous stuff that will bring doomsday.”

She said that, short of plagiarizing artists’ work, the tech could legitimately be used in interesting ways in the art world, which has always managed to incorporate new technologies as tools.

“If Monet had had a laptop back then, he would have used it,” she said. We’re betting he would have been a fan of Photoshop’s blur tool.

Disclaimer: this article was written by a human journalist possessed of free will (arguably), fallibility (allegedly), and self-awareness (usually).
